When optimizing a website's ranking, most people focus first on inclusion (indexing), because a page can only be ranked after it has been included. Inclusion is therefore the basis of ranking, and the number of included pages affects how likely the site is to rank. Below, the editor shares some suggestions on how to get a website included by Baidu quickly.

1. Actively submit links to Baidu

The Baidu Link Submission Tool lets a site actively push data to Baidu, which shortens the time it takes the spider to discover new pages. It is generally recommended to submit new links to Baidu as soon as the site has been updated. Any content on the website can be submitted with this tool. Baidu processes the submitted links according to its inclusion criteria, so there is no guarantee that everything submitted will be included.
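As a rough illustration of active submission, the sketch below builds an HTTP POST request that carries one URL per line. The endpoint address, the `site` and `token` parameters, and the `build_push_request` helper are assumptions for illustration; the exact interface address and token should be taken from your own account on the Baidu Search Resource Platform. The request is only constructed here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed endpoint; confirm the exact address in your own platform account.
PUSH_ENDPOINT = "http://data.zz.baidu.com/urls"


def build_push_request(site, token, urls):
    """Build (but do not send) a POST request with one URL per line."""
    query = urlencode({"site": site, "token": token})
    body = "\n".join(urls).encode("utf-8")
    return Request(
        f"{PUSH_ENDPOINT}?{query}",
        data=body,
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


# Hypothetical site, token, and page URLs.
request = build_push_request(
    "https://www.example.com",
    "YOUR_TOKEN",
    [
        "https://www.example.com/a.html",
        "https://www.example.com/b.html",
    ],
)
# To actually submit: urllib.request.urlopen(request, timeout=10)
```

Running this right after publishing new pages matches the advice above to submit as soon as the site is updated.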

2. Create a Sitemap

A sitemap is usually an XML file. When you open it, you will find that it contains markup, page addresses, modification dates, and so on. This content is meant for search engine spiders to crawl, and spiders make good use of sitemaps. Besides XML, a sitemap can also be a txt or HTML file named sitemap. XML sitemaps are useful for most search engines, while HTML sitemaps are very friendly to Baidu.
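To make the structure of an XML sitemap concrete, here is a minimal sketch that generates one with Python's standard library. The `build_sitemap` helper and the example URLs and dates are hypothetical; the element names (`urlset`, `url`, `loc`, `lastmod`) follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; in practice these come from the site's own content.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-01"),
    ("https://www.example.com/news/1.html", "2024-01-02"),
])
print(sitemap_xml)
```

The result would typically be saved as `sitemap.xml` in the site root so that spiders can find it.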

3. Add Baidu Auto Push Code

Baidu Auto Push is a tool launched by the Baidu Search Resource Platform to speed up the discovery of web pages. You install the auto-push JS code on your pages; whenever one of those pages is visited, its URL is immediately pushed to Baidu, which helps the page get included quickly.

4. Robots File Creation

The robots file tells search engines what they may and may not crawl. It is recommended to keep the file under 48 KB; a larger file is generally difficult for search engines to read. The robots file supports many rules. Most content should be left open to crawling, and only privacy-related content needs to be disallowed. Adding the sitemap address to the robots file helps search engines find the site's pages and include them faster.
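The points above can be sketched as a small robots file that allows most paths, blocks a private directory, and lists the sitemap. The paths and domain are hypothetical. The sketch then checks the rules with Python's `urllib.robotparser` and confirms the file stays under the 48 KB size suggested above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: allow everything except /admin/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check what a spider such as Baiduspider may fetch under these rules.
can_fetch_news = parser.can_fetch("Baiduspider", "https://www.example.com/news/1.html")
can_fetch_admin = parser.can_fetch("Baiduspider", "https://www.example.com/admin/login")

# Size check against the 48 KB recommendation from the text.
size_ok = len(ROBOTS_TXT.encode("utf-8")) < 48 * 1024

print(can_fetch_news, can_fetch_admin, size_ok)
```

Testing rules this way before uploading the file reduces the risk of accidentally blocking pages that should be included.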


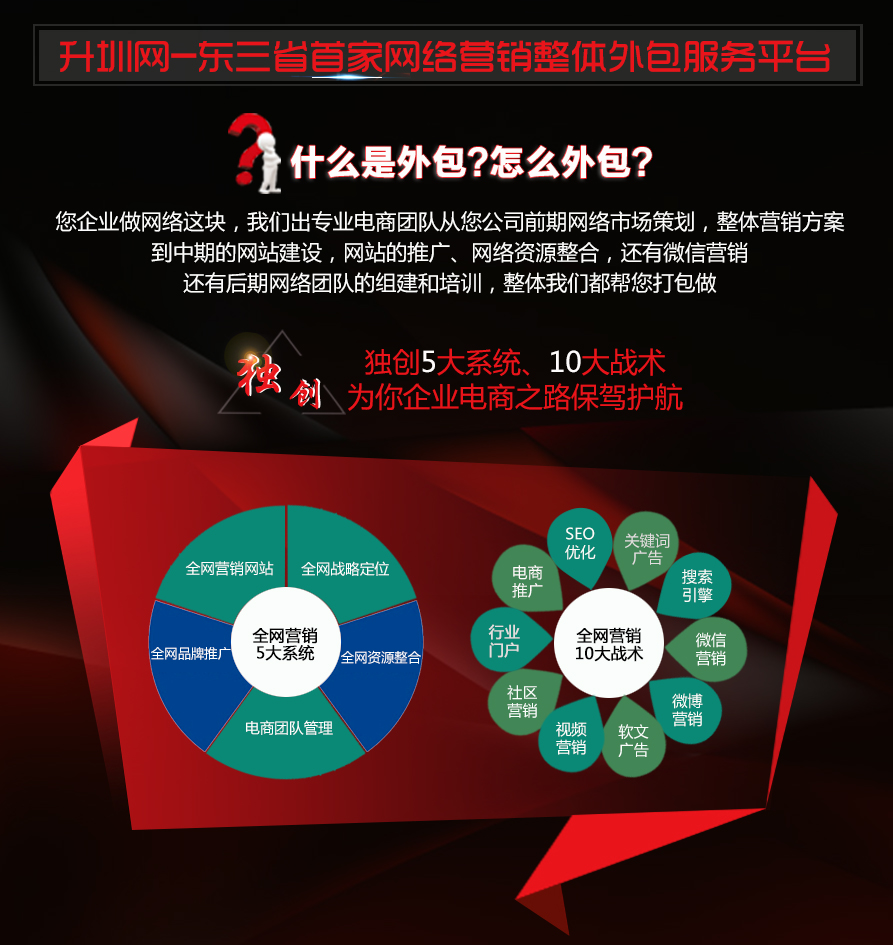
